Multimodal Emotion Dynamics in the 2024 U.S. Presidential Debate (Under Review; Revise and Resubmit)

This project investigates how emotions circulate during live-streamed political debates, focusing on the 2024 U.S. Presidential debate between Kamala Harris and Donald Trump. Unlike traditional broadcast debates, live-streaming platforms introduce interactive chat features, creating “affective publics” where viewers collectively express and amplify emotions in real time.

To analyze these dynamics, the study applied a multimodal computational framework:

Speech Emotion Recognition (SER): deep learning models to detect emotional tone in candidates’ voices.

Facial Emotion Recognition: computer vision to analyze candidates’ expressions across debate segments.

Text Emotion Classification: transformer-based models to classify emotions in over 58,000 YouTube live chat messages.

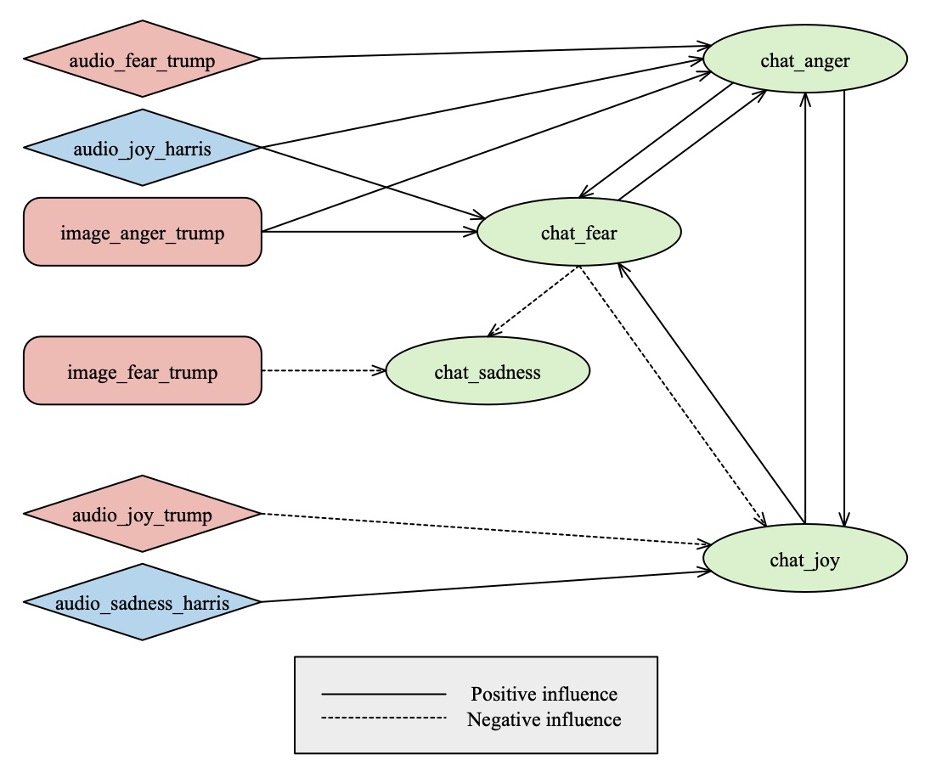

Time Series Modeling: vector autoregression (VAR) to capture how candidate cues and audience emotions interact over time.
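To make the time series step concrete, here is a minimal sketch of a first-order VAR fitted by ordinary least squares. It is illustrative only: the study's actual lag order, variable set, and software are not stated here, so the lag of 1, the two-variable setup (candidate anger and chat anger), the synthetic data, and the names `solve`, `fit_var1`, and `TRUE_A` are all assumptions.

```python
import random


def solve(a, b):
    """Solve the square linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry into position.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_var1(series):
    """Fit y_t = c + A y_{t-1} + e_t by OLS, one equation at a time.

    `series` is a list of rows, one per time step, each holding k values.
    Returns (c, A): c is the intercept vector, and A[i][j] is the effect
    of variable j at time t-1 on variable i at time t.
    """
    k = len(series[0])
    X = [[1.0] + list(row) for row in series[:-1]]  # intercept + lagged values
    Y = series[1:]
    # Normal equations X'X beta = X'y; X'X is shared by all k equations.
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k + 1)]
           for i in range(k + 1)]
    c, A = [], []
    for eq in range(k):
        xty = [sum(x[i] * y[eq] for x, y in zip(X, Y)) for i in range(k + 1)]
        beta = solve(xtx, xty)
        c.append(beta[0])
        A.append(beta[1:])
    return c, A


# Synthetic example (NOT the study's data). Variable 0 stands in for a
# candidate anger signal, variable 1 for chat anger. The hypothetical
# TRUE_A makes candidate anger feed into chat anger, but not the reverse.
random.seed(0)
TRUE_A = [[0.5, 0.0],
          [0.4, 0.3]]
data = [[0.0, 0.0]]
for _ in range(2000):
    prev = data[-1]
    data.append([
        sum(a * p for a, p in zip(row, prev)) + random.gauss(0, 1)
        for row in TRUE_A
    ])

c_hat, A_hat = fit_var1(data)
print("candidate anger -> chat anger:", round(A_hat[1][0], 3))
print("chat anger -> candidate anger:", round(A_hat[0][1], 3))
```

In this setup, an off-diagonal coefficient such as `A_hat[1][0]` is what captures a cross-series effect (a candidate cue preceding a change in chat emotion), while the diagonal entries capture each series' own persistence. A real analysis would more likely rely on an established implementation, with lag selection and significance tests, than on a hand-rolled solver.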

Key findings:

- Candidates’ emotional expressions significantly shaped live chat sentiment. For example, anger expressed by Trump, whether in his voice or his facial expressions, amplified anger and fear in the chat.

- Emotions within the live chat reinforced each other, creating feedback loops that heightened the overall emotional climate.

- Harris maintained composure, with steady expressions of sadness and joy, while Trump’s volatility (spikes in anger and sadness) drove more reactive audience responses.

These results underscore the power of emotional contagion in shaping public opinion and the importance of studying synchronous, multimodal interactions in political communication.

Stay tuned for publication!